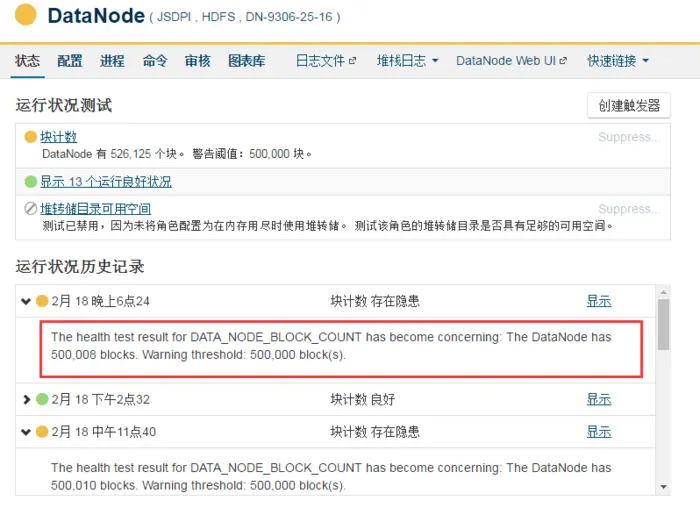

CDH health check reports a DATA_NODE_BLOCK_COUNT alert

Original alert text:

The health test result for DATA_NODE_BLOCK_COUNT has become concerning: The DataNode has 500,008 blocks. Warning threshold: 500,000 block(s).

Explanation from the official CDH documentation:

This is a DataNode health test that checks for whether the DataNode has too many blocks. Having too many blocks on a DataNode may affect the DataNode's performance, and an increasing block count may require additional heap space to prevent long garbage collection pauses. This test can be configured using the DataNode Block Count Thresholds DataNode monitoring setting.

A Q&A thread found online:

Having more number of blocks raises the heap requirement at the DataNodes. The threshold warning exists to also notify you about this (that you may need to soon raise the DN heap size to allow it to continue serving blocks at the same performance).

With CM5 we have revised the number to 600k, given memory optimisation improvements for DNs in CDH4.6+ and CDH5.0+. You can feel free to raise the threshold via the CM -> HDFS -> Configuration -> Monitoring section fields, but do look into if your users have begun creating too many tiny files as it may hamper their job performance with overheads of too many blocks (and thereby, too many mappers).

Source: http://community.cloudera.com/t5/Storage-Random-Access-HDFS/DATA-NODE-BLOCK-COUNT-threshold-200-00-block-s/td-p/12186

Follow-up from the original poster (10-07-2014 10:47 PM):

Thanks for your response. I deleted useless HDFS files (3 TB) yesterday (hadoop fs -rm -r), but the warning message still continues. DATA_NODE_BLOCK_COUNT is the same as before deleting the files (current value is 921,891 blocks). How can I reduce the current DATA_NODE_BLOCK_COUNT?

Reply:

Even after a file is deleted, the blocks will remain if HDFS Trash is enabled. Do you have Trash enabled? It is configured as stated at this URL: http://www.cloudera.com/documentation/archive/manager/4-x/4-8-6/ClouderaManager-Managing-Clusters/cmmc_hdfs_trash.html
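The thread above recommends checking whether users have started creating too many tiny files. One rough way to do that is to look at the average file size per directory. The following is only a sketch: it assumes the default four-column output of `hdfs dfs -count` (DIR_COUNT, FILE_COUNT, CONTENT_SIZE, PATHNAME) has already been captured as text. The function names, the 16 MiB cutoff, and the sample paths and numbers are all made up for illustration and do not come from the original post.

```python
def parse_count_line(line):
    """Parse one line of `hdfs dfs -count <path>` output.

    Assumed default columns: DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME.
    """
    dir_count, file_count, content_size, path = line.split(None, 3)
    return int(dir_count), int(file_count), int(content_size), path.strip()


def find_small_file_dirs(count_output, max_avg_bytes=16 * 1024 * 1024):
    """Return (path, file_count, avg_bytes) for every directory whose
    average file size is below max_avg_bytes, most files first.

    The 16 MiB default is an arbitrary illustrative cutoff.
    """
    suspects = []
    for line in count_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        _, files, size, path = parse_count_line(line)
        if files == 0:
            continue  # empty directory, nothing to average
        avg = size / files
        if avg < max_avg_bytes:
            suspects.append((path, files, avg))
    return sorted(suspects, key=lambda s: s[1], reverse=True)


# Hypothetical sample shaped like `hdfs dfs -count /user/*` output.
SAMPLE = """\
          12       250000       5242880000 /user/etl
           3          400     107374182400 /user/warehouse
           1            0                0 /user/empty
"""

suspects = find_small_file_dirs(SAMPLE)
for path, files, avg in suspects:
    print(f"{path}: {files} files, avg {avg / 1024:.1f} KiB")
```

In this made-up sample only `/user/etl` is flagged: it holds many files that average about 20 KiB each, and since each small file still occupies at least one block, directories like this drive up the block count on the DataNodes.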